Modern agent demos look impressive until you load them with real work.

Real constraints. Real ambiguity. Real environments.

That’s when most agents collapse.

And the failure is not stylistic or accidental. It is structural.

The architecture itself is missing something essential. This opening post explains why.

1. The Hidden Cost of Ungrounded Reasoning

The earliest wave of agentic systems relied on a simple idea: Let the model think step by step, and it will reason more reliably.

Chain-of-Thought (CoT) became the default mental model.

It looked rational. It sounded rational. It often wasn’t.

CoT has a quiet but devastating flaw:

It reasons entirely inside its own mind.

No external feedback.

No environmental correction.

No grounding.

The moment a CoT trace introduces a false assumption, the rest of the reasoning inherits the lie.

The evidence bears this out:

- In the error analysis reported in the original ReAct paper, hallucinated facts or reasoning accounted for 56% of Chain-of-Thought's failures on HotpotQA.

- The model continues confidently, building on fabricated foundations.

- There is no mechanism to notice the mistake, let alone correct it.
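The compounding effect can be made concrete with a toy model. The sketch below assumes, purely for illustration, that each reasoning step is independently sound with probability `p` and that one unsound step corrupts every step after it, because later thoughts condition only on earlier ones. The function names `propagate` and `prob_trace_sound` are hypothetical.

```python
from itertools import accumulate
import operator
from typing import List


def propagate(step_is_sound: List[bool]) -> List[bool]:
    """Mark each step trustworthy only if it and every earlier step are sound.

    Toy assumption: a CoT step conditions only on prior thoughts, so once a
    false step appears, nothing downstream can recover.
    """
    return list(accumulate(step_is_sound, operator.and_))


def prob_trace_sound(p: float, n: int) -> float:
    """Probability that all n steps are sound, assuming independent steps."""
    return p ** n


if __name__ == "__main__":
    # A single bad step at position 2 taints everything after it.
    print(propagate([True, False, True, True]))  # [True, False, False, False]
    # Even 95%-reliable steps leave a 10-step trace fully sound about 60% of the time.
    print(round(prob_trace_sound(0.95, 10), 4))  # 0.5987
```

The model is deliberately crude (real errors are neither independent nor always fatal), but it shows why a trace with no outside check can only accumulate risk.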

This is the first architectural blind spot:

Reasoning without observation drifts.

An agent that never checks its assumptions eventually becomes certain about things that were never true.

And scale doesn’t save it. Larger models hallucinate less, but they hallucinate with greater confidence.

When the environment doesn’t push back, the mind drifts toward coherence, not correctness.

2. Why Tool-Using Agents Still Fail

If CoT is “thought without grounding,” early tool-use agents were “action without strategy.” In the research literature, this setup is usually called the Act-only baseline.

The next generation assumed: Give the model tools, and let it figure out how to use them.

Search. APIs. Databases. Calculators.

The intuition made sense. The results didn’t.

Tools alone don’t create intelligence. In fact, they expose the absence of it.

Act-only agents suffer from three predictable failure modes:

1. Repetition loops

The agent keeps repeating the same tool call, expecting the environment to change.

2. Goal amnesia

Without internal planning, the agent loses track of the original objective.

3. Shallow exploration

The agent uses tools reactively, not strategically. It cannot decompose problems or form subgoals.
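The first failure mode is easy to reproduce. In the sketch below, `toy_search`, its one-entry index, and `act_only_agent` are all hypothetical: the agent's next action is a fixed function of the goal, and no reasoning step reads the observation, so a failed query is simply reissued until the step budget runs out.

```python
from typing import Callable, List, Optional, Tuple

NO_RESULTS = "No relevant results."


def toy_search(query: str) -> str:
    # Hypothetical environment: only the character's full name is indexed.
    index = {
        "Bilbo Baggins": "Bilbo Baggins is a character portrayed on screen by Actor X.",
    }
    return index.get(query, NO_RESULTS)


def act_only_agent(
    goal: str, search: Callable[[str], str], max_steps: int = 3
) -> Tuple[Optional[str], List[Tuple[str, str]]]:
    """Act-only baseline: actions come straight from the goal string.

    There is no thought step between observation and action, so nothing
    diagnoses why a call failed or proposes a better one.
    """
    calls: List[Tuple[str, str]] = []
    for _ in range(max_steps):
        query = goal  # no decomposition, no reformulation
        observation = search(query)
        calls.append((query, observation))
        if observation != NO_RESULTS:
            return observation, calls
    return None, calls


if __name__ == "__main__":
    answer, calls = act_only_agent("Bilbo actor birthplace", toy_search)
    for query, observation in calls:
        print(f"search({query!r}) -> {observation}")
    print("Final answer:", answer)
```

Running it issues the identical failing call three times and ends with no answer: the repetition loop in its purest form.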

The architecture is missing something again:

Action without reasoning leads to thrashing.

These systems aren’t “agents.” They are unguided interfaces wrapped around a language model.

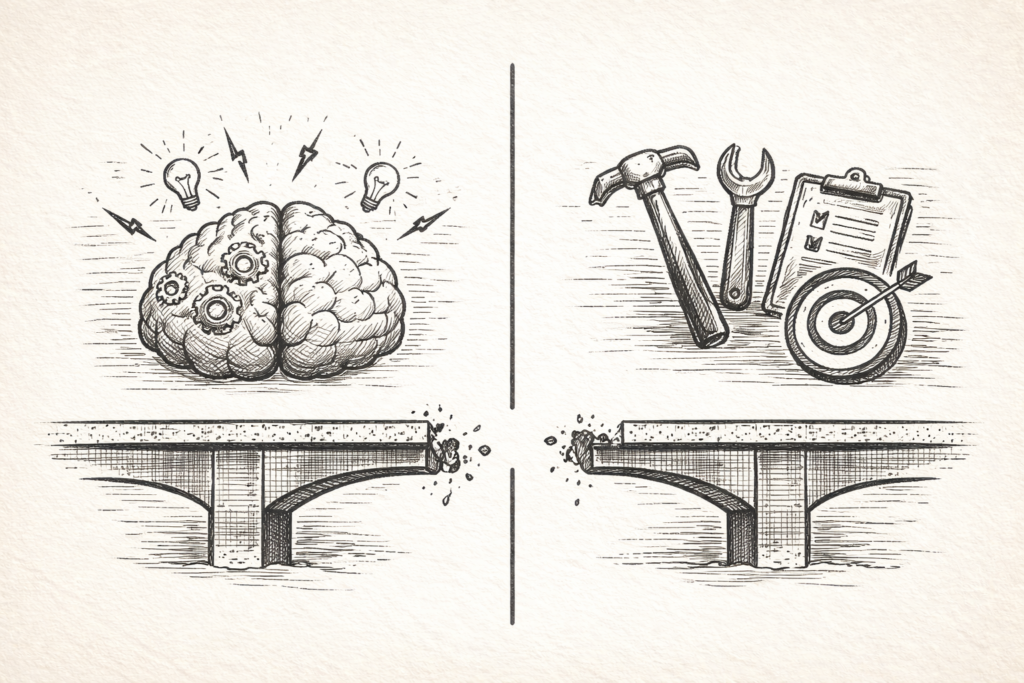

3. The Architecture Both Camps Missed

Most failures in early agent design came from solving the wrong half of the problem.

CoT:

- Excellent at producing structured reasoning

- Terrible at validating it

Act-only agents:

- Excellent at interacting with tools

- Terrible at deciding which tools to use or why

Both systems were incomplete reflections of intelligence.

Human cognition doesn’t separate reasoning from action. It blends them.

We think. We act. We observe. We revise. We try again.

It’s a loop, not a monologue.

The architecture missing from early agents was not more clever thoughts or more powerful tools.

It was the connection between them.

Intelligence is not an internal process. It is a negotiation between mind and environment.

4. A Tale of Two Failures (Mini Traces)

CoT Failure Trace (FEVER)

Thought: The claim says "Marie Curie wrote the book On War."

Thought: On War is associated with military theory, so Marie Curie may have written a scientific version.

Thought: Therefore the claim is likely true, as the two concepts are related.

Final Answer: SUPPORTS

Every step sounds rational.

Every step is wrong.

There was never an opportunity to check the claim.

Act-Only Failure Trace (HotpotQA)

Action: search("Bilbo actor birthplace")

Observation: Returned unrelated article about fictional characters.

Action: search("actor who played Bilbo born")

Observation: No relevant results.

Action: search("Bilbo Baggins actor birth city")

Observation: Still irrelevant.

Final Answer: None

The agent keeps moving, but it never reasons about why a search failed, so each reformulation is a blind guess.

Both failures point to the same architectural absence:

A system without a feedback loop cannot learn from its own actions.

5. Foreshadow: The Bridge Between Thought and Action

The breakthrough in agent architecture didn’t come from better prompting or bigger models.

It came from reintroducing something fundamental:

Thoughts that guide actions.

Actions that update thoughts.

A loop that persists until the environment yields clarity.

This is the beginning of ReAct (Reasoning + Acting): a system that neither reasons in isolation nor acts without thinking, but reasons inside the environment, step by step.
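The loop can be sketched in a few lines. This is a toy, not the ReAct reference implementation: `reason` is a hypothetical rule-based stand-in for a model call with rules hard-coded for one question, and `search` queries a made-up two-entry index, so "Actor X" and "City Y" are placeholders. What matters is the shape: each thought reads the latest observation and chooses the next action, and the loop stops only when an observation supports an answer.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

# Hypothetical environment: a tiny index keyed by exact query.
TOY_INDEX: Dict[str, str] = {
    "Bilbo Baggins": "Bilbo Baggins is a character portrayed on screen by Actor X.",
    "Actor X": "Actor X was born in City Y.",
}


def search(query: str) -> str:
    return TOY_INDEX.get(query, "No relevant results.")


@dataclass
class Step:
    thought: str
    action: str
    observation: str


def reason(question: str, steps: List[Step]) -> Tuple[str, Optional[str], Optional[str]]:
    """Hypothetical stand-in for a model call.

    Returns (thought, next_query, final_answer); exactly one of the last two is set.
    """
    if not steps:
        return f"To answer {question!r}, first find who played Bilbo.", "Bilbo Baggins", None
    last = steps[-1].observation
    if "portrayed on screen by " in last:
        actor = last.split("portrayed on screen by ")[1].rstrip(".")
        return f"The actor is {actor}; look up where they were born.", actor, None
    if "was born in " in last:
        city = last.split("was born in ")[1].rstrip(".")
        return f"The observation gives the birthplace: {city}.", None, city
    # A failed observation shapes the next thought instead of being ignored.
    return "That search failed; go back to the character's full name.", "Bilbo Baggins", None


def react_loop(question: str, max_steps: int = 5) -> Tuple[Optional[str], List[Step]]:
    steps: List[Step] = []
    for _ in range(max_steps):
        thought, query, answer = reason(question, steps)  # think
        if answer is not None:
            return answer, steps
        assert query is not None
        observation = search(query)  # act, then observe
        steps.append(Step(thought, query, observation))  # revise on the next pass
    return None, steps


if __name__ == "__main__":
    answer, steps = react_loop("Where was the actor who played Bilbo born?")
    for s in steps:
        print(f"Thought: {s.thought}\nAction: search({s.action!r})\nObservation: {s.observation}")
    print("Final Answer:", answer)
```

Unlike the act-only sketch, the second query here did not exist in the original question; it was derived from the first observation.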

The next post explains that architecture in detail:

- why ReAct reduces hallucination

- how it maintains intentionality

- how it scales to long-horizon tasks

- why it became the foundation of modern multi-agent orchestration frameworks

For now, remember the lesson:

Reasoning without grounding drifts.

Action without reasoning leads to thrashing.

Agency emerges only when the two are woven together.