The Problem With Agent Evaluation

Most conversations about AI agents focus on capabilities.

Can it browse the web? Can it write code? Can it use tools?

These questions matter. But they miss the architecture.

I've spent twelve years building distributed systems that had to survive production—systems where a subtle design flaw at 2am becomes an incident at 3am. That experience taught me something: capability without structure is fragile.

An agent that can do impressive things in a demo often can't do reliable things under load. The difference isn't the model. It's the architecture beneath it.

So when I evaluate any agent system—whether it's a paper, a framework, or something I'm building—I ask four questions. Always the same four. In the same order.

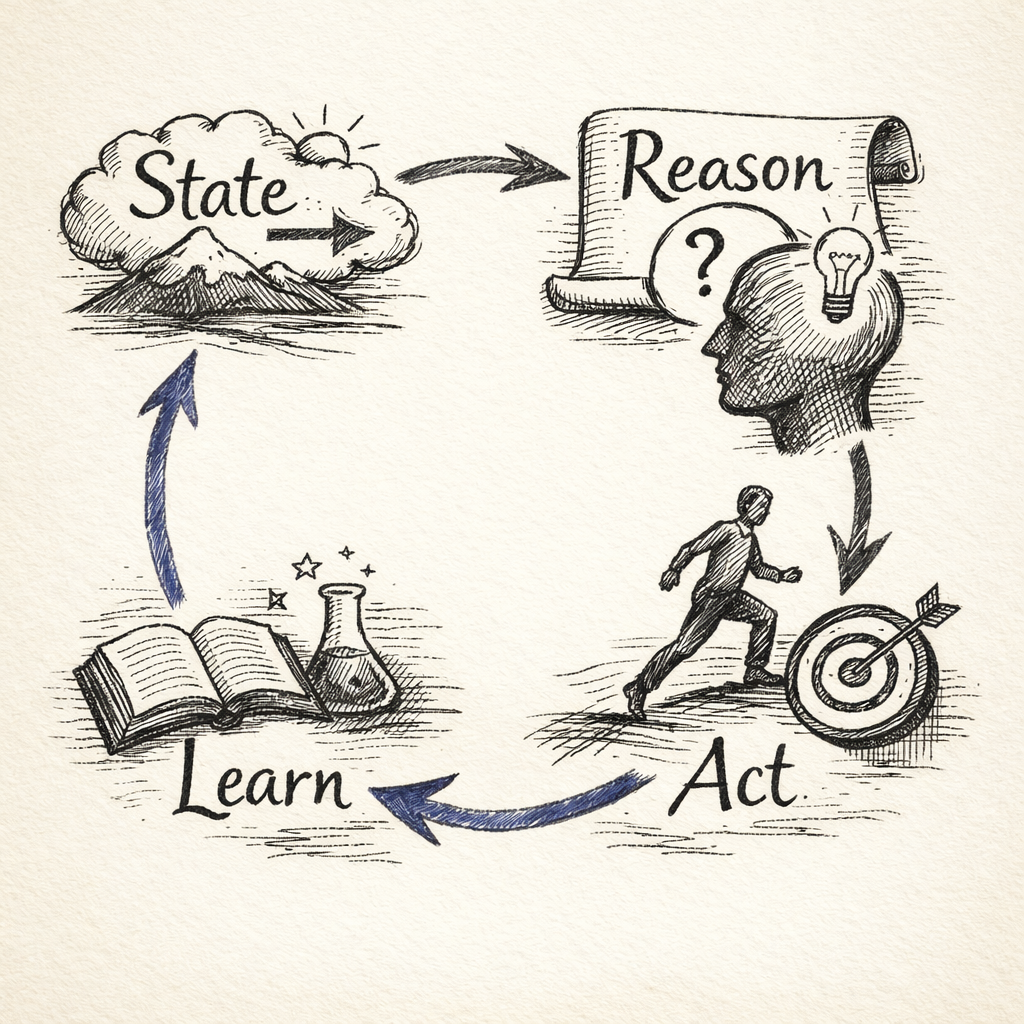

I call this the SRAL framework: State, Reason, Act, Learn.

I've formalized this framework in a paper, "SRAL: A Framework for Evaluating Agentic AI Architectures." This post is an accessible introduction to the core ideas.

Why "State" and Not "Sense"

If you've encountered similar frameworks, you might have seen "Sense-Reason-Act-Learn"—particularly in physical AI and robotics contexts. That framing makes sense for embodied systems with cameras, LiDAR, and continuous sensor streams.

But for LLM-based software agents, sensing isn't the problem. These agents receive input just fine—user messages, API responses, tool outputs. What they lack is persistent state.

That's why SRAL starts with State, not Sense:

- Sense implies continuous perception—a stream of input the agent receives.

- State implies constructed knowledge—an explicit world-model the agent builds, maintains, and manages.

This distinction matters because most agent failures trace back to state problems, not perception problems. The agent didn't fail to receive information. It failed to remember it.

The Four Questions

1. State: What Does It Remember?

Every agent operates from some understanding of its situation. The question is: where does that understanding live, and how stable is it?

State includes:

- What the agent knows about the task

- What it remembers from previous steps

- What context it carries forward

Most agents rely on the model's context window as their only memory. This works until it doesn't. Context windows have limits. When they fill up, information gets compressed or truncated. The agent then reasons from incomplete state—often without knowing anything is missing.

The architectural question: Is state explicit and managed, or implicit and fragile?

Agents with explicit state architecture—external memory, structured context, deliberate persistence—handle long-horizon tasks. Agents that treat state as an afterthought collapse under complexity.
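To make the explicit-versus-implicit distinction concrete, here is a minimal sketch. The `AgentState` class, its fields, and the example task are hypothetical names chosen for illustration, not part of any particular framework. Constraints and decisions live in a structure outside the prompt, and the prompt is rebuilt from that structure on every step, so trimming old transcript turns cannot silently drop a constraint.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    """Hypothetical explicit state that lives outside the model's context window."""
    task: str
    constraints: list[str] = field(default_factory=list)
    decisions: list[str] = field(default_factory=list)
    transcript: list[str] = field(default_factory=list)

    def record_turn(self, text: str) -> None:
        self.transcript.append(text)

    def build_prompt(self, max_recent_turns: int = 3) -> str:
        # Constraints and decisions are always included in full.
        # Only the raw transcript is trimmed to the most recent turns.
        recent = self.transcript[-max_recent_turns:]
        parts = [
            f"Task: {self.task}",
            "Constraints: " + "; ".join(self.constraints),
            "Decisions so far: " + "; ".join(self.decisions),
            "Recent turns: " + " | ".join(recent),
        ]
        return "\n".join(parts)


state = AgentState(task="Migrate the billing service")
state.constraints.append("Do not change the public API")
for step in range(1, 8):
    state.record_turn(f"step {step} output")

prompt = state.build_prompt()
print(prompt)
```

By step 7 the early transcript turns are gone from the prompt, but the constraint recorded at the start is still there because it was never stored in the transcript in the first place.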

This mirrors something true about human organizations. A team that doesn't document its decisions eventually forgets why it made them. It relitigates the same debates, makes contradictory commitments, loses institutional memory. State isn't a technical detail. It's the foundation of coherent identity over time.

2. Reason: How Does It Decide?

Reasoning is how the agent moves from "here's what I know" to "here's what I'll do."

This includes:

- Goal decomposition

- Planning and replanning

- Handling exceptions

- Choosing between options

The quality of reasoning is bounded by the quality of state. If the agent has forgotten a constraint from step 2, its reasoning at step 7 will be confident and wrong.

The architectural question: Is reasoning grounded in reality, or floating in abstraction?

Chain-of-Thought reasoning looks rational. But without environmental feedback, it can drift. The model invents facts, builds on them, and arrives at conclusions that sound coherent but aren't true. ReAct addresses this by interleaving reasoning with action, forcing the model to check its thoughts against the world.
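The interleaving idea can be sketched in a few lines. This is a ReAct-style loop written for illustration, not the implementation from the ReAct paper: `scripted_policy` is a hypothetical stand-in for a model call, and `lookup_stock` and `INVENTORY` are a made-up tool and environment. The point is only that each thought after the first is conditioned on an observation from the environment rather than on the model's own earlier text.

```python
from typing import Callable

# Hypothetical environment: the only source of ground truth in this sketch.
INVENTORY = {"widget": 4, "gadget": 0}


def lookup_stock(item: str) -> str:
    """Stand-in tool that reports stock for an item."""
    return f"{item}: {INVENTORY.get(item, 0)} in stock"


def scripted_policy(history: list[str]) -> tuple[str, str, str]:
    """Stand-in for a model call. Returns (thought, action, argument).

    A real agent would prompt an LLM with the history here.
    """
    observations = [line for line in history if line.startswith("Observation:")]
    if not observations:
        return ("I should check stock before promising delivery.", "lookup", "gadget")
    if observations[-1].endswith(": 0 in stock"):
        return ("The gadget is out of stock.", "finish", "Cannot ship gadget today")
    return ("Stock is available.", "finish", "Can ship gadget today")


def react_loop(
    policy: Callable[[list[str]], tuple[str, str, str]],
    max_steps: int = 5,
) -> tuple[str, list[str]]:
    history: list[str] = []
    for _ in range(max_steps):
        thought, action, arg = policy(history)
        history.append(f"Thought: {thought}")
        if action == "finish":
            return arg, history
        # Interleaving step: act, then record what the environment said,
        # so the next thought is conditioned on it.
        history.append(f"Action: {action}[{arg}]")
        history.append(f"Observation: {lookup_stock(arg)}")
    return "Gave up", history


answer, trace = react_loop(scripted_policy)
print(answer)
for line in trace:
    print(line)
```

Without the lookup, the policy would have had to guess at stock levels. With it, the final answer is tied to what the environment reported.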

3. Act: How Does It Affect the World?

Action is where the agent meets reality. Tools, APIs, environments, users.

This includes:

- What tools are available

- How tool calls are structured

- How errors are handled

- What feedback the environment provides

Tools don't make agents intelligent. They expose how intelligent the agent already is. A model that can't reason well will use tools poorly—calling the same search repeatedly, misinterpreting results, failing to recover from errors.

The architectural question: Does action inform reasoning, or just execute it?

The best architectures create a loop: action produces observation, observation updates state, updated state improves the next reasoning step. Without this loop, agents don't learn from their own behavior.
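As a rough sketch of that loop, assuming a single made-up tool: `flaky_search`, `LoopState`, and `choose_query` below are hypothetical names, and `choose_query` stands in for the reasoning step. Each action returns a structured observation, the observation is written into state, and the next decision reads that state, so a failed call is not repeated blindly.

```python
from dataclasses import dataclass, field


@dataclass
class Observation:
    ok: bool
    content: str


@dataclass
class LoopState:
    facts: list[str] = field(default_factory=list)
    failed_calls: set[tuple[str, str]] = field(default_factory=set)


def flaky_search(query: str) -> str:
    """Hypothetical tool: fails on one query to exercise the error path."""
    if query == "sral framwork":  # misspelled on purpose
        raise ValueError("no results")
    return f"results for '{query}'"


def act(state: LoopState, tool_name: str, arg: str) -> Observation:
    # This sketch has one tool, so tool_name is only used as a key.
    # Skip calls that already failed instead of repeating them.
    if (tool_name, arg) in state.failed_calls:
        return Observation(ok=False, content="skipped: already failed")
    try:
        obs = Observation(ok=True, content=flaky_search(arg))
        state.facts.append(obs.content)  # observation updates state
    except ValueError as exc:
        obs = Observation(ok=False, content=str(exc))
        state.failed_calls.add((tool_name, arg))  # failures update state too
    return obs


def choose_query(state: LoopState) -> str:
    """Stand-in for reasoning: pick the next query based on current state."""
    if ("search", "sral framwork") in state.failed_calls:
        return "sral framework"
    return "sral framwork"


state = LoopState()
first = act(state, "search", choose_query(state))
second = act(state, "search", choose_query(state))
print(first, second, state.facts)
```

The second call succeeds only because the first failure was recorded where the reasoning step could see it. Drop the `failed_calls` update and the agent would retry the same misspelled query.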

4. Learn: How Does It Improve?

This is the weakest component in most agent systems.

Learning includes:

- Incorporating feedback from actions

- Adjusting strategy based on outcomes

- Persisting improvements across sessions

Most agents don't learn at all. Each conversation starts fresh. The model doesn't remember what worked yesterday or what failed an hour ago.

Some agents learn within a session—updating their approach based on observations. Fewer learn across sessions. Almost none learn in ways that persist without retraining.

The architectural question: Is learning architectural, or accidental?

When learning isn't designed into the system, agents repeat the same mistakes. They can't transfer success from one task to similar tasks. They remain perpetual beginners.
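One simple form of designed-in learning is a lesson store that outlives the session. The sketch below is an assumption about how this might look, not a description of any existing system: `LessonStore`, the JSON file layout, and the `csv_import` task type are all hypothetical. It shows only persistence without retraining, not how an agent would decide what counts as a lesson.

```python
import json
import tempfile
from pathlib import Path


class LessonStore:
    """Hypothetical cross-session memory backed by a JSON file."""

    def __init__(self, path: Path) -> None:
        self.path = path
        self.lessons: dict[str, list[str]] = {}
        if path.exists():
            self.lessons = json.loads(path.read_text())

    def record(self, task_type: str, lesson: str) -> None:
        bucket = self.lessons.setdefault(task_type, [])
        if lesson not in bucket:
            bucket.append(lesson)
        # Write through on every update so a crash does not lose lessons.
        self.path.write_text(json.dumps(self.lessons, indent=2))

    def recall(self, task_type: str) -> list[str]:
        return list(self.lessons.get(task_type, []))


store_path = Path(tempfile.mkdtemp()) / "lessons.json"

# Session 1: the agent hits a failure and writes down what it learned.
session_one = LessonStore(store_path)
session_one.record("csv_import", "Check the delimiter before parsing")

# Session 2: a fresh object, as if the process had restarted.
session_two = LessonStore(store_path)
recalled = session_two.recall("csv_import")
print(recalled)
```

In a real agent, the recalled lessons would be fed into state at the start of a matching task, which is where Learn closes the loop back to State.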

Why This Order Matters

SRAL isn't just a checklist. The order reflects dependencies.

Reason depends on State. You can't think clearly about a situation you've forgotten.

Act depends on Reason. Tools without strategy produce thrashing, not progress.

Learn depends on all three. You can only improve what you can observe, and you can only observe through the loop of reasoning, acting, and updating state.

When an agent fails, I trace backward through SRAL. The visible failure is usually in Act or Reason. The root cause is usually in State.
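The backward trace can be sketched as a small function over per-step diagnostics. This is an illustration under stated assumptions, not the failure tracing methodology from the paper: `StepRecord` and its three flags are hypothetical, and a real runtime would need some way to produce them. Starting from the first visible failure in Act, the function walks back through Reason to State and reports the earliest step where the deepest fault appeared.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StepRecord:
    """Hypothetical per-step diagnostics an agent runtime might log."""
    step: int
    state_complete: bool    # did the prompt contain every known constraint?
    reasoning_valid: bool   # did the plan respect the state it was given?
    action_succeeded: bool  # did the action produce the intended effect?


def trace_root_cause(records: list[StepRecord]) -> Optional[tuple[int, str]]:
    """Return (step, component) for the root cause, or None if nothing failed."""
    failed = [r for r in records if not r.action_succeeded]
    if not failed:
        return None
    visible = failed[0]
    # Walk backward through the dependency chain: Act <- Reason <- State.
    if not visible.state_complete:
        # State was already broken; find the first step where it went wrong.
        first_bad = next(r for r in records if not r.state_complete)
        return first_bad.step, "State"
    if not visible.reasoning_valid:
        return visible.step, "Reason"
    return visible.step, "Act"


records = [
    StepRecord(1, True, True, True),
    StepRecord(2, True, True, True),
    StepRecord(3, False, True, True),   # a constraint silently drops out
    StepRecord(4, False, True, True),
    StepRecord(5, False, True, False),  # the failure finally becomes visible
]

root = trace_root_cause(records)
print(root)
```

In this example the failure surfaces at step 5 as a bad action, but the trace points at step 3, where state first became incomplete.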

Using SRAL

When I read a paper, I map it to SRAL. Which components does it strengthen? Which does it ignore? Where are the gaps the authors don't acknowledge?

When I evaluate a framework, I ask: does this help me manage State explicitly? Does it support the Reason-Act loop? Does it enable any form of Learn?

When I build, I design each component deliberately. State architecture comes first. Reasoning patterns come second. Tool design comes third. Learning mechanisms—if I can manage them—come last.

The Formal Framework

I've written a full paper formalizing SRAL, including:

- Detailed definitions of each component

- Comparison to OODA, ReAct, and other frameworks

- Failure tracing methodology

- Design principles for agent architects

The full paper is "SRAL: A Framework for Evaluating Agentic AI Architectures," available at the DOI in the citation below.

Citation:

Sharan, A. (2025). SRAL: A Framework for Evaluating Agentic AI Architectures. Zenodo. https://doi.org/10.5281/zenodo.18049753

Conclusion

This is how I've started seeing agent systems. Not as capabilities to be impressed by, but as architectures to be evaluated.

The hype cycle rewards capability. But a capability lead lasts only until the next model release. Architecture endures. The teams that understand this, and design for structure rather than chase features, will build the agents that actually work.

The model is not the agent. The architecture is.