Turning architectural gaps into engineering patterns

The Gap Between Demo and Production

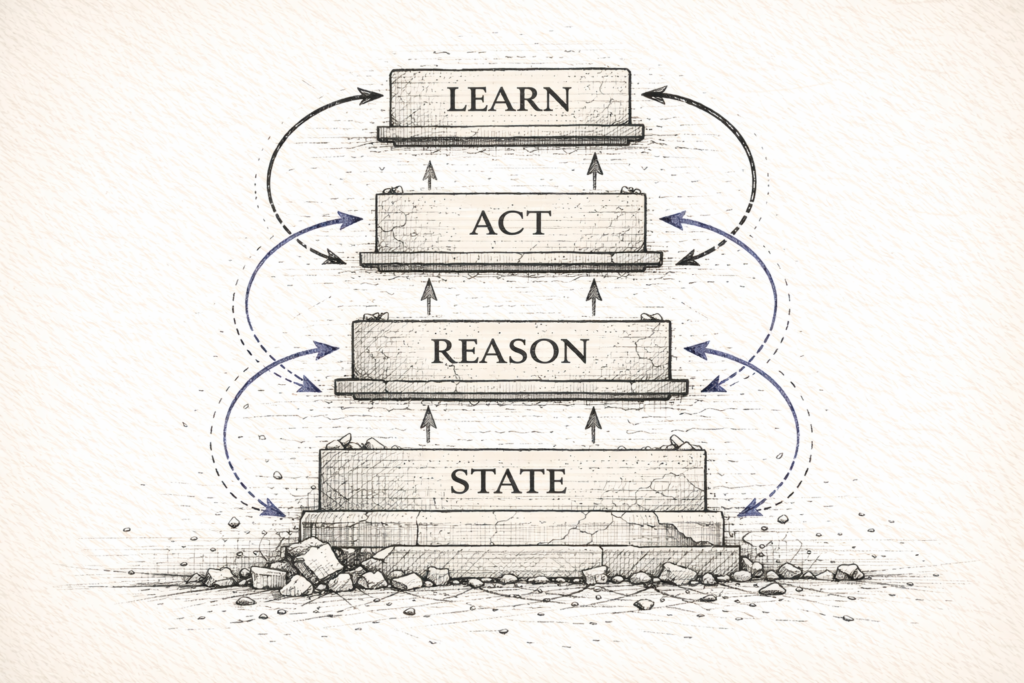

Last week I evaluated LangChain's architecture using SRAL. The scorecard revealed something uncomfortable:

- State: ⚠️ Weak-to-Moderate - Memory optional, context-window dependent

- Reason: ⚠️ Moderate - ReAct exists, verification doesn't

- Act: ✅ Moderate-to-Strong - Excellent primitives

- Learn: ❌ Absent - No learning mechanisms

LangChain isn't broken. It's powerful primitives without enforced discipline.

The evaluation told me what's missing. This post tells you what to do about it.

Scope note: I’m using LangChain + LangGraph as the runtime. LangChain provides the primitives; LangGraph is where state, control flow, and enforcement become architectural. Most of the production patterns below live at the LangGraph layer, by design.

These are the patterns I use when building with LangChain. Not workarounds—architectural patterns that supply the discipline the framework assumes you'll provide. Think of this as the production hardening layer you add on top of excellent primitives.

The question isn't "should you use LangChain?" If you're building agents, you probably should. The question is: how do you prevent the demo-to-production gap from swallowing your reliability?

1. State: Making Memory Architectural

What Breaks

LangChain treats memory as optional. The docs say: "chains can be built without it." Most examples show conversation history managed in-context. When context fills up, you're in reactive mode—trimming, summarizing, hoping.

This breaks in production.

Long-horizon tasks overflow context. Constraints from step 3 vanish by step 12. The agent contradicts itself and can't explain why. The reasoning, given what it remembered, was sound. The failure wasn't in reasoning. It was in state management.

I've seen this pattern in three production deployments. Each time, the symptoms appeared in output quality. The root cause lived in state architecture.

The Blind Spot

Most teams assume context windows are memory. They're not. Context windows are working memory—temporary, fragile, first-in-first-out. When you need long-term memory, persistent state, or critical information that must never truncate, context windows fail you.

The blind spot: conflating perception with persistence. The agent receives input continuously. But without explicit state architecture, nothing persists beyond the context limit.

The Architectural Truth

State must be first-class, not incidental.

Three patterns make this real:

Pattern 1: Persistent State from Day 1

Note: Code snippets are illustrative and intentionally simplified to highlight architectural patterns. They are not drop-in examples.

Don't wait until context overflows. Use external state management from the start:

from langgraph.checkpoint.postgres import PostgresSaver

# Initialize with persistent checkpointing

checkpointer = PostgresSaver.from_conn_string("postgresql://...")

# Build graph with state persistence

graph = StateGraph(AgentState)

graph.add_node("agent", agent_node)

graph.compile(checkpointer=checkpointer)State survives context limits. Long conversations don't degrade. You can resume from any checkpoint. Recovery from failures becomes architectural, not manual.

Pattern 2: Structured State Objects

Don't dump everything into conversation history. Design explicit state schemas:

from typing import TypedDict, Annotated

from operator import add

class AgentState(TypedDict):

# Conversation context

messages: Annotated[list, add]

# Task state (persists across context limits)

requirements: dict # Never truncate these

constraints: list # Critical rules

# Progress tracking

completed_steps: list

current_phase: str

# Decision context

tool_results: dict

verified_facts: dictCritical information lives in typed fields, not free text. You control what persists. Trimming conversation history doesn't lose constraints. The agent always knows its requirements.

This mirrors how distributed systems separate transaction logs from state machines. The log can be truncated. The state machine cannot.

Pattern 3: Pinned Context

For absolutely critical information that must never truncate:

import json

from langchain_core.messages import SystemMessage

def create_system_message(state: AgentState) -> SystemMessage:

requirements = state.get("requirements", {})

constraints = state.get("constraints", [])

pinned_context = f"""

REQUIREMENTS (Never forget these):

{json.dumps(requirements, indent=2)}

CONSTRAINTS (Must satisfy all):

{chr(10).join(f'- {c}' for c in constraints)}

"""

return SystemMessage(content=pinned_context)

# Inject at every step (append/prefix)

graph.add_node("inject_context", lambda state: {

"messages": [create_system_message(state)]

})Requirements regenerate from state at every step. Context truncation can't remove them. The agent always operates from complete constraints.

Architectural principle: Reasoning depth cannot exceed state stability.

Implementation Checklist

- [ ] Use

PostgresSaveror equivalent persistent checkpointer - [ ] Define explicit

AgentStateTypedDict with critical fields - [ ] Separate task state (requirements, constraints) from conversation history

- [ ] Implement pinned context regeneration from state

- [ ] Test with conversations exceeding 50+ turns

- [ ] Verify constraints survive context trimming

2. Reason: Forcing Verification Loops

What Breaks

LangChain permits reasoning chains that never verify assumptions. You can build multi-step flows where each step builds on the previous—with zero grounding. The composability that makes prototyping easy makes hallucination easy too.

ReAct patterns exist in agents. But chains can skip them entirely. The framework doesn't enforce verification. It permits it.

The Blind Spot

Teams mistake composability for reliability. If components chain together smoothly, we assume the chain is sound. But a smooth chain can be smoothly wrong.

The blind spot: confusing syntactic composition (A → B → C) with semantic grounding (A is true, therefore B is true, therefore C is true).

The Architectural Truth

Verification cannot be optional.

Three patterns enforce it:

Pattern 1: Mandatory Verification Steps

Build verification into the graph structure:

from langgraph.prebuilt import ToolNode

# Reasoning must be followed by verification

graph.add_node("reason", reasoning_node)

graph.add_node("verify", verification_node) # Default path in production graphs

graph.add_node("act", tool_node)

# Enforce sequence: reason → verify → act

graph.add_edge("reason", "verify")

graph.add_edge("verify", "act")

def verification_node(state: AgentState):

"""Extract claims from reasoning, validate against state/tools"""

last_reasoning = state["messages"][-1].content

claims = extract_claims(last_reasoning)

verified = {}

for claim in claims:

# Check against known state

if claim in state.get("verified_facts", {}):

verified[claim] = state["verified_facts"][claim]

continue

# Otherwise, verify with tools/retrieval

result = verify_claim(claim, state)

verified[claim] = result

return {"verified_facts": verified}The graph structure enforces verification. Reasoning can't lead directly to action. Every claim passes through validation. Unverified assumptions surface as missing verification results.

This is architectural enforcement, not procedural request. In production graphs, verification is the default route. If you allow skipping it, treat that as an explicit risk decision.

Pattern 2: Assumption Tracking

Make the agent track what it knows versus what it assumes:

class ReasoningState(TypedDict):

assumptions: list[str] # Unverified claims

verified_facts: dict[str, any] # Proven truths

confidence_level: str # "high" if few assumptions

def reasoning_node(state: AgentState):

"""Separate facts from assumptions"""

response = model.invoke(state["messages"])

parsed = parse_reasoning(response.content)

return {

"assumptions": parsed["assumptions"],

"verified_facts": parsed["verified_facts"],

"confidence_level": "low" if len(parsed["assumptions"]) > 3 else "high"

}

def should_verify(state: AgentState) -> bool:

"""Require verification if confidence low or assumptions high"""

return (

state.get("confidence_level") == "low" or

len(state.get("assumptions", [])) > 2

)

# Conditional verification based on reasoning quality

graph.add_conditional_edges(

"reason",

should_verify,

{True: "verify", False: "act"}

)The agent knows what it doesn't know. High assumption count triggers verification. You can inspect reasoning quality before acting. Hallucination risk becomes visible.

Pattern 3: Tool-Enforced Grounding

Don't just call tools—require the agent to use results:

from langchain_core.tools import tool

@tool

def web_search(query: str) -> dict:

"""Search the web and REQUIRE citation in next reasoning step"""

results = actual_search(query)

return {

"results": results,

"citation_required": True, # Flag for verification

"query": query

}

def verify_tool_usage(state: AgentState):

"""Check that tool outputs were actually cited/used in the next reasoning step"""

last_tool_calls = state.get("tool_results", [])

last_reasoning = [m for m in state["messages"] if m.type == "ai"][-1]

for tool_call in last_tool_calls:

if tool_call.get("citation_required"):

if tool_call["query"] not in last_reasoning.content:

return {

"error": f"Tool result from '{tool_call['query']}' not used"

}

return {}Tools become checkpoints, not just utilities. The agent must cite sources. Reasoning that ignores tool results gets caught. Grounding is verified, not assumed.

Architectural principle: Unverified reasoning is how demos work and production fails.

Implementation Checklist

- [ ] Add explicit verification nodes in graph

- [ ] Track assumptions separately from verified facts

- [ ] Implement confidence scoring based on assumption count

- [ ] Require citation of tool results in reasoning

- [ ] Test with deliberately ambiguous queries

- [ ] Verify ungrounded reasoning gets caught

3. Act: Closing the Feedback Loop

What Breaks

LangChain provides excellent tool primitives. But feedback loops are optional. You can execute tools and ignore results. The agent proceeds with its plan regardless of what actually happened.

The pattern repeats: tool called, result returned, agent continues as if nothing changed. This works in demos where environments are stable. It fails in production where environments respond.

The Blind Spot

Teams treat action as execution, not observation. The tool returned a result—mission accomplished. But did the agent incorporate that result into its understanding? Did it update its world-model based on what happened?

The blind spot: conflating tool invocation with environmental feedback. The agent acted. But did it observe?

The Architectural Truth

Observation must update state, not just log.

Three patterns close the loop:

Pattern 1: Observation as State Update

Make tool results update state, not just append to messages:

def tool_execution_node(state: AgentState):

"""Execute tools AND update state from results"""

messages = state["messages"]

last_message = messages[-1]

tool_results = {}

errors = []

for tool_call in last_message.tool_calls:

try:

result = execute_tool(tool_call)

tool_results[tool_call["name"]] = result

# Update state based on result type

if tool_call["name"] == "get_requirements":

state["requirements"] = result

elif tool_call["name"] == "validate_constraint":

state["verified_facts"][tool_call["args"]["constraint"]] = result

except Exception as e:

errors.append({

"tool": tool_call["name"],

"error": str(e)

})

return {

"messages": [ToolMessage(...)],

"tool_results": tool_results,

"errors": errors,

"last_action_success": len(errors) == 0

}State becomes the single source of truth. Tool results don't just live in conversation history. The agent's world-model updates from observations. Decisions are based on current state, not stale assumptions.

This mirrors event sourcing in distributed systems. Events append to the log. But state machines process events and update their internal representation. Both are necessary.

Pattern 2: Retry Logic with Learning

Don't retry blindly—learn from failures:

from langchain_core.messages import AIMessage

def smart_retry_node(state: AgentState):

"""Retry failed tools with adjusted strategy"""

errors = state.get("errors", [])

if not errors:

return {}

# Analyze failure patterns

failure_summary = summarize_failures(errors)

# Update strategy based on failure type

retry_strategy = AIMessage(content=f"""

Previous attempt failed: {failure_summary}

Adjusted strategy:

- If API rate limit: wait and retry

- If invalid params: check schema and correct

- If unavailable resource: find alternative

Attempting retry with corrections...

""")

return {

"messages": [retry_strategy],

"retry_count": state.get("retry_count", 0) + 1

}

# Conditional retry based on error type

graph.add_conditional_edges(

"tool_execution",

lambda state: state.get("last_action_success", True),

{

True: "reason", # Success → continue

False: "smart_retry" # Failure → analyze and retry

}

)Failures inform strategy. Retries aren't blind repetition. The agent adapts based on error type. Maximum retry count prevents infinite loops.

Pattern 3: Parallel Tool Calls with Dependency Tracking

When using parallel tools, track dependencies:

def parallel_tool_node(state: AgentState):

"""Execute tools in parallel but respect dependencies"""

tool_calls = state["messages"][-1].tool_calls

# Group by dependencies

independent = [tc for tc in tool_calls if not tc.get("depends_on")]

dependent = [tc for tc in tool_calls if tc.get("depends_on")]

# Execute independent calls in parallel

with concurrent.futures.ThreadPoolExecutor() as executor:

independent_results = list(executor.map(execute_tool, independent))

# Execute dependent calls after dependencies complete

dependent_results = []

for tc in dependent:

dep_result = independent_results[tc["depends_on"]]

result = execute_tool(tc, context=dep_result)

dependent_results.append(result)

return {

"tool_results": independent_results + dependent_results,

"execution_order": "parallel_with_dependencies"

}Parallel execution where possible, sequential where necessary. Dependencies are explicit, not implicit. Race conditions can't corrupt state.

Architectural principle: Actions without observations produce thrashing, not progress.

Implementation Checklist

- [ ] Tool results update

AgentState, not just messages - [ ] Implement failure analysis in retry logic

- [ ] Track tool success/failure in state

- [ ] Add dependency tracking for parallel calls

- [ ] Test with deliberately failing tools

- [ ] Verify state updates correctly from observations

4. Learn: Building Improvement Mechanisms

What Breaks

LangChain has no learning. Persistence exists—conversations can be stored. But stored history isn't mined for patterns. The agent doesn't extract "this worked, that failed, try this instead."

Each session starts fresh. Mistakes repeat. Success doesn't transfer.

This isn't a limitation of the model. It's a limitation of the architecture. The framework provides no mechanism for improvement.

The Blind Spot

Teams conflate model capability with system learning. The model improves through training. But the system—the specific agent architecture you've built—doesn't improve through use.

The blind spot: assuming that because LLMs can generalize, your agent system will too. It won't. Not without explicit learning architecture.

The Architectural Truth

Learning requires architecture, not hope.

Since LangChain won't provide it, you build it yourself. Three patterns:

Pattern 1: Experience Database

Build experience tracking manually:

from datetime import datetime

from langchain.storage import InMemoryStore

experience_store = InMemoryStore()

def record_success(state: AgentState, task_type: str):

"""Log successful patterns for future retrieval"""

success_pattern = {

"task_type": task_type,

"strategy": extract_strategy(state),

"tools_used": list(state.get("tool_results", {}).keys()),

"constraints": state.get("constraints", []),

"outcome": "success",

"timestamp": datetime.now().isoformat()

}

key = f"success_{task_type}_{datetime.now().timestamp()}"

experience_store.mset([(key, success_pattern)])

def retrieve_similar_success(task_type: str) -> list:

"""Find similar successful patterns"""

all_experiences = experience_store.mget([])

similar = [

exp for exp in all_experiences

if exp.get("task_type") == task_type and

exp.get("outcome") == "success"

]

return sorted(similar, key=lambda x: x["timestamp"], reverse=True)[:3]

def reasoning_with_experience(state: AgentState):

"""Incorporate past successes into reasoning"""

task_type = identify_task_type(state)

similar_successes = retrieve_similar_success(task_type)

if similar_successes:

experience_prompt = f"""

Similar tasks succeeded with these strategies:

{json.dumps(similar_successes, indent=2)}

Consider applying similar patterns.

"""

state["messages"].append(SystemMessage(content=experience_prompt))

return stateSuccessful patterns persist across sessions. Similar tasks retrieve relevant experience. The agent doesn't start from zero every time.

Manual, yes. But functional.

Pattern 2: Failure Analysis

Track what doesn't work:

def record_failure(state: AgentState, task_type: str):

"""Log failures to avoid repeating mistakes"""

failure_pattern = {

"task_type": task_type,

"attempted_strategy": extract_strategy(state),

"failure_point": identify_failure_step(state),

"error_message": state.get("errors", []),

"timestamp": datetime.now().isoformat()

}

key = f"failure_{task_type}_{datetime.now().timestamp()}"

experience_store.mset([(key, failure_pattern)])

def check_for_known_failures(state: AgentState) -> bool:

"""Warn if attempting a known-failure pattern"""

current_strategy = extract_strategy(state)

task_type = identify_task_type(state)

past_failures = [

exp for exp in experience_store.mget([])

if exp.get("task_type") == task_type and

exp.get("outcome") == "failure" and

exp.get("attempted_strategy") == current_strategy

]

if past_failures:

warning = f"""

WARNING: This strategy failed {len(past_failures)} times previously.

Consider alternative approach.

"""

state["messages"].append(SystemMessage(content=warning))

return True

return FalseFailures become knowledge. Known-bad strategies trigger warnings. The agent avoids repeating mistakes. Learns negatively even without positive improvement.

Pattern 3: Strategy Refinement

Compare attempts and extract improvements:

def analyze_strategy_evolution(task_type: str):

"""Identify which strategies improved over time"""

experiences = [

exp for exp in experience_store.mget([])

if exp.get("task_type") == task_type

]

experiences.sort(key=lambda x: x["timestamp"])

# Track success rate over time

success_rates = []

window_size = 5

for i in range(len(experiences) - window_size):

window = experiences[i:i+window_size]

successes = sum(1 for exp in window if exp["outcome"] == "success")

success_rates.append(successes / window_size)

if success_rates and success_rates[-1] > success_rates[0]:

return "improving"

return "degrading"

def initialize_with_learning(task_type: str):

"""Start with best-known strategy"""

evolution = analyze_strategy_evolution(task_type)

if evolution == "improving":

recent_successes = retrieve_similar_success(task_type)

return f"Recent attempts improved. Use these patterns: {recent_successes}"

return "Recent attempts degraded. Reconsider approach."You're manually building what Learn should do automatically. Success rates tracked. Strategies evolve. The agent gets better over time.

Architectural principle: Without improvement mechanisms, agents remain perpetual novices.

Implementation Checklist

- [ ] Implement experience store (InMemoryStore or PostgreSQL)

- [ ] Record successful patterns with task type + strategy

- [ ] Record failures with error context

- [ ] Retrieve similar experiences at reasoning step

- [ ] Add warnings for known-failure patterns

- [ ] Track success rates over time

- [ ] Initialize new tasks with best-known strategies

5. The Production Stack

These patterns compose into a production-ready architecture:

The following is a conceptual wiring diagram showing how SRAL maps to a LangGraph-style runtime.

from langgraph.graph import StateGraph

from langgraph.checkpoint.postgres import PostgresSaver

from langgraph.prebuilt import ToolNode

# 1. STATE: Structured state + persistence

class ProductionAgentState(TypedDict):

messages: Annotated[list, add]

requirements: dict

constraints: list

verified_facts: dict

tool_results: dict

errors: list

experience: list

# 2. Initialize with checkpointing

checkpointer = PostgresSaver.from_conn_string("postgresql://...")

graph = StateGraph(ProductionAgentState)

# 3. REASON: Verification enforced

graph.add_node("reason", reasoning_node)

graph.add_node("verify", verification_node)

# 4. ACT: Tools with feedback

graph.add_node("tools", tool_execution_node)

graph.add_node("observe", observation_update_node)

# 5. LEARN: Experience integration

graph.add_node("learn", learning_node)

# Graph structure enforces reliability

graph.add_edge("reason", "verify")

graph.add_edge("verify", "tools")

graph.add_edge("tools", "observe")

graph.add_edge("observe", "learn")

# Conditional retry on failure

graph.add_conditional_edges(

"observe",

lambda s: s.get("last_action_success", True),

{True: "reason", False: "retry"}

)

# Compile with persistence

agent = graph.compile(checkpointer=checkpointer)Test it before trusting it (These are conceptual checks—think of them as invariants you should be able to assert, not literal pytest code):

def test_state_persistence():

"""Verify state survives context overflow"""

state = {"requirements": {"max_cost": 100}}

for i in range(100):

state["messages"].append(f"Message {i}")

assert state["requirements"]["max_cost"] == 100

def test_verification_enforcement():

"""Verify ungrounded reasoning gets caught"""

state = {"messages": [AIMessage(content="The capital of France is Berlin")]}

verified = verification_node(state)

assert "capital of France" in verified["assumptions"]

def test_learning_retrieval():

"""Verify similar experiences are retrieved"""

record_success(state, task_type="data_analysis")

similar = retrieve_similar_success("data_analysis")

assert len(similar) > 06. What This Doesn't Solve

These patterns have limits:

Learning is still manual. You're building the improvement loop yourself, not using a framework primitive. This works but requires maintenance.

Performance overhead. Verification adds latency. Experience retrieval adds calls. The trade-off is reliability versus speed.

Complexity cost. More nodes, more state, more code to maintain. Production hardening isn't free.

Model capability ceiling. Architecture can't fix a weak model. These patterns assume reasonable model capability and add reliability on top.

When these patterns aren't enough:

- Tasks requiring true learning → Consider RL-based approaches

- Real-time low-latency needs → Verification loops add overhead

- Simple demos → This is production hardening, not rapid prototyping

- Budget constraints → More calls = more cost

The trade-off is explicit: reliability versus speed and simplicity. Production demands reliability.

7. The Takeaway

LangChain provides powerful primitives. But primitives aren't architecture.

The SRAL evaluation revealed gaps. These patterns supply what the framework doesn't enforce.

State: External persistence + structured schemas + pinned context

Reason: Verification nodes + assumption tracking + tool grounding

Act: State updates from observations + smart retries + dependency tracking

Learn: Experience stores + failure analysis + strategy evolution

The result: production-ready agents built on LangChain primitives, hardened with architectural discipline.

This is how you build agents that work. Not by choosing a different framework. By adding the discipline the framework assumes you'll supply.

The framework gives you capability. You supply the architecture.

Resources

- SRAL Framework: Paper

- LangChain Evaluation: Full architectural analysis