Author: Aakash Sharan

Published: December 24, 2025

DOI: 10.5281/zenodo.18049753

Abstract

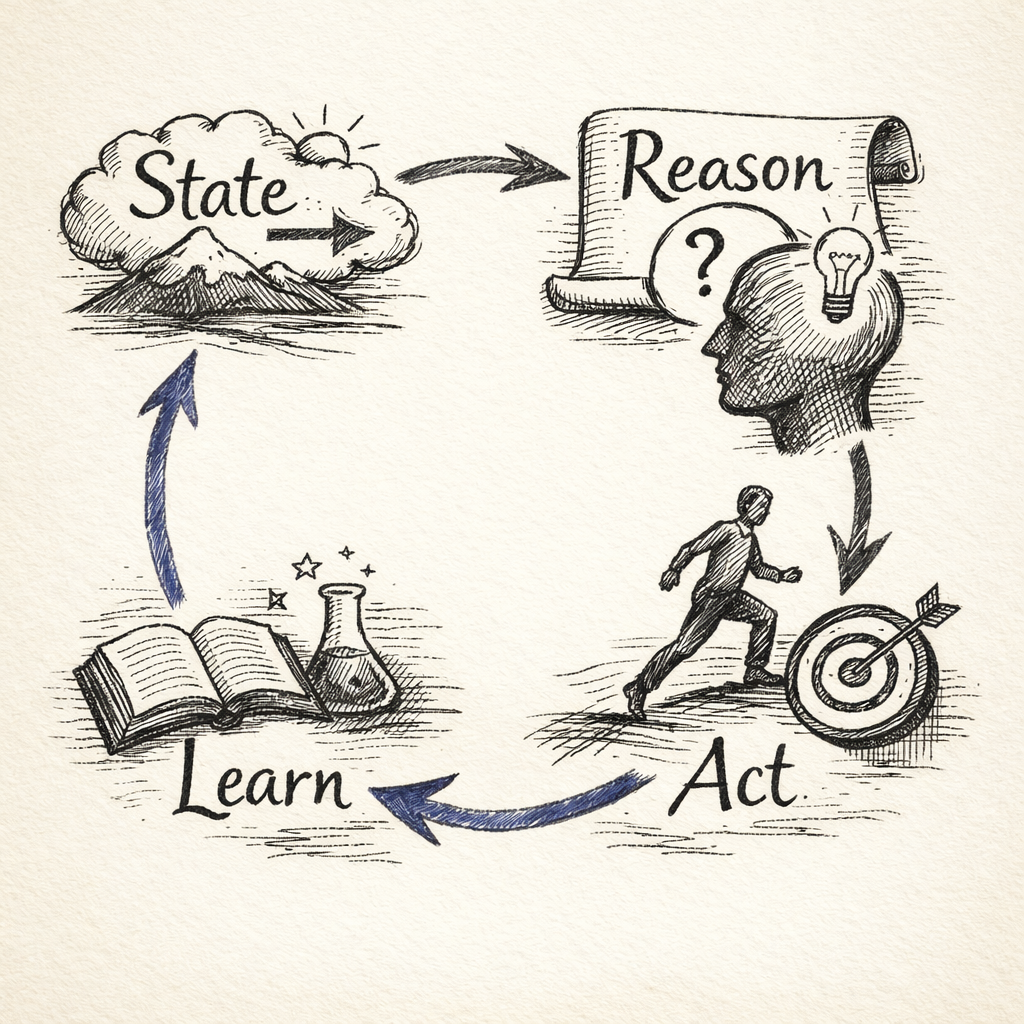

The rapid proliferation of LLM-based agents has produced systems with impressive capabilities but inconsistent reliability. Current discourse focuses on what agents can do—tool use, code generation, web browsing—while neglecting the architectural foundations that determine whether they can do these things reliably. This paper introduces SRAL (State-Reason-Act-Learn), a minimal evaluation framework for reasoning about agent architecture. Unlike perception-oriented loops such as Sense-Reason-Act-Learn or Perceive-Reason-Act, SRAL foregrounds State—the constructed, persistent world-model that agents must explicitly maintain—as the foundational component upon which reasoning, action, and learning depend. We argue that most agent failures trace not to reasoning or action, but to unmanaged state: context window overflow, lost constraints, and forgotten decisions. SRAL provides both a dependency model (State → Reason → Act → Learn) and an evaluation methodology (four architectural questions applied in sequence). We differentiate SRAL from related frameworks including OODA, ReAct, and enterprise agent architectures, and demonstrate its application as an analytical tool for agent system design.

Keywords: agentic AI, agent architecture, LLM agents, state management, evaluation framework

Downloads & Links

- 📄 Download Full Paper (PDF)

- 🔗 Zenodo Repository (DOI: 10.5281/zenodo.18049753)

- 💻 GitHub Repository (evaluation templates & checklist)

- 📝 Blog Post (accessible introduction)

The SRAL Framework

SRAL evaluates agent architectures along four dimensions, in strict dependency order:

State → Reason → Act → Learn

The Four Questions

| Component | Architectural Question |
|---|---|
| State | Is state explicit and managed, or implicit and fragile? |
| Reason | Is reasoning grounded in reality, or floating in abstraction? |
| Act | Does action inform reasoning, or merely execute it? |
| Learn | Is learning architectural, or accidental? |
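
One way to read "applied in sequence" is as an ordered checklist in which a failing component blocks the components that depend on it. The sketch below is a minimal illustration under that assumption, not the paper's evaluation procedure: the `Finding` class, the `evaluate` function, and the `pass`/`fail`/`blocked` labels are hypothetical names introduced here, and the yes/no answers stand in for whatever judgment an evaluator actually makes.

```python
from dataclasses import dataclass

# The four questions, in the dependency order State -> Reason -> Act -> Learn.
SRAL_QUESTIONS = [
    ("State", "Is state explicit and managed, or implicit and fragile?"),
    ("Reason", "Is reasoning grounded in reality, or floating in abstraction?"),
    ("Act", "Does action inform reasoning, or merely execute it?"),
    ("Learn", "Is learning architectural, or accidental?"),
]


@dataclass
class Finding:
    component: str
    question: str
    status: str  # "pass", "fail", or "blocked" (hypothetical labels)


def evaluate(answers: dict[str, bool]) -> list[Finding]:
    """Walk the questions in order; once one fails, mark the rest as blocked.

    Assumption: a later component cannot be judged sound while a component
    it depends on is unsound. A missing answer is treated as a failure.
    """
    findings = []
    blocked = False
    for component, question in SRAL_QUESTIONS:
        if blocked:
            status = "blocked"
        elif answers.get(component, False):
            status = "pass"
        else:
            status = "fail"
            blocked = True
        findings.append(Finding(component, question, status))
    return findings


# Example: state is managed, but reasoning is not grounded.
report = evaluate({"State": True, "Reason": False, "Act": True, "Learn": True})
for finding in report:
    print(f"{finding.component:<6} {finding.status}")
```

In this example, Act and Learn are reported as blocked even though they were answered favorably, because Reason failed ahead of them in the dependency order.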

Why "State" not "Sense"?

Unlike frameworks such as Sense-Reason-Act-Learn, which are used in physical AI and robotics, SRAL uses State rather than Sense:

- Sense implies continuous perception—a stream of environmental input

- State implies constructed knowledge—an explicit world-model that must be managed

For LLM-based software agents, the challenge isn't perception—it's persistent state management. Without explicit architecture for state, agents lose context, forget constraints, and make contradictory decisions.
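
The difference between implicit and explicit state can be made concrete with a small sketch. The code below is an illustration under stated assumptions, not an implementation from the paper: `AgentState`, `build_prompt`, and the `max_turns` parameter are hypothetical names, and truncating the history by turn count is a simplified stand-in for a context window limit. The point it shows is that a constraint held in an explicit state object survives truncation, while the same constraint stated only in an early conversation turn does not.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    """Explicit, persistent state kept outside the conversation history."""
    goal: str
    constraints: list[str] = field(default_factory=list)
    decisions: list[str] = field(default_factory=list)

    def record_decision(self, decision: str) -> None:
        self.decisions.append(decision)

    def render(self) -> str:
        """Serialize the state so it can be placed at the top of every prompt."""
        lines = [f"Goal: {self.goal}", "Constraints:"]
        lines += [f"- {c}" for c in self.constraints]
        lines.append("Decisions so far:")
        lines += [f"- {d}" for d in self.decisions]
        return "\n".join(lines)


def build_prompt(state: AgentState, history: list[str], max_turns: int = 3) -> str:
    """Combine the full state with only the most recent turns.

    Assumption: dropping all but the last `max_turns` turns approximates
    what happens when older context no longer fits in the window.
    """
    recent = history[-max_turns:]
    return state.render() + "\n\nRecent turns:\n" + "\n".join(recent)


state = AgentState(
    goal="Migrate the reporting service",
    constraints=["Do not modify the production database"],
)
state.record_decision("Use a read replica for testing")

# Ten turns of history; only the last three reach the prompt.
history = [f"turn {i}: ..." for i in range(10)]
prompt = build_prompt(state, history)
print(prompt)
```

Here the early turns are dropped from the prompt, but the constraint and the recorded decision still appear because they live in the state object rather than in the history.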

How to Cite

BibTeX

@misc{sharan2025sral,
  author    = {Sharan, Aakash},
  title     = {SRAL: A Framework for Evaluating Agentic AI Architectures},
  year      = {2025},
  publisher = {Zenodo},
  doi       = {10.5281/zenodo.18049753},
  url       = {https://doi.org/10.5281/zenodo.18049753}
}

APA

Sharan, A. (2025). SRAL: A Framework for Evaluating Agentic AI Architectures. Zenodo. https://doi.org/10.5281/zenodo.18049753

MLA

Sharan, Aakash. "SRAL: A Framework for Evaluating Agentic AI Architectures." Zenodo, 24 Dec. 2025, doi:10.5281/zenodo.18049753.

Contact & Collaboration

I'm interested in collaborations applying SRAL to existing frameworks, empirical validation, and multi-agent extensions.

- 📧 Email: akisharan@gmail.com

- 💼 LinkedIn: www.linkedin.com/in/aakashsharan

- 🐙 GitHub: https://github.com/aakashsharan

- 🌐 Website: aakashsharan.com

© 2025 Aakash Sharan. Licensed under CC-BY 4.0.