Hey there! So we decided to create a Word Count application - a classic MapReduce example. But what the heck is a Word Count application, you ask? It's basically a program that reads data and calculates the most common words. Easy peasy. For example:

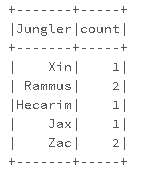

dataDF = sqlContext.createDataFrame([('Jax',), ('Rammus',), ('Zac',), ('Xin', ),

('Hecarim', ), ('Zac', ), ('Rammus', )], ['Jungler'])

For this project, we used Apache Spark 2.0.2 on Databricks Cloud and used A Tale of Two Cities by Charles Dickens from Project Gutenberg as our dataset.

Once we read the dataset, we made the following assumptions to clean the data:

- Words should be counted regardless of capitalization.

- Punctuation should be removed.

- Leading and trailing spaces should be removed.

To make sure we stuck to these assumptions, we created a function called removePunctuation to perform the operations:

from pyspark.sql.functions import regexp_replace, trim, col, lower

def removePunctuation(column):

"""Removes punctuation, changes to lower case, strips leading and trailing spaces.

Args:

column: a sentence

Returns:

Column: a column named 'sentence'

"""

return lower(trim(regexp_replace(column, '[^\w\s]+', ""))).alias('sentence')Once that was done, we defined a function for word counting:

def wordCount(wordsDF):

""" Creates a DataFrame with word counts.

Args:

wordsDF: A DataFrame consisting of one string column called 'Jungler'.

Returns:

DataFrame of a (str, int): containing Jungler and count columns.

"""

return (wordsDF

.groupBy('word')

.count())Before we called the wordCount() function, we addressed two issues:

- We needed to split each line by spaces.

- We needed to filter out empty lines or words.

So we did that like this:

from pyspark.sql.functions import split,explode

twoCitiesWordsDF = (twoCitiesDF

.select(explode(split(twoCitiesDF.sentence, " ")).alias('word'))

.where("word != ''")

)

twoCitiesWordsDF.show(truncate=False)

twoCitiesWordsDFCount = twoCitiesWordsDF.count()

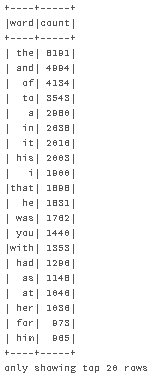

print twoCitiesWordsDFCountAnd voila! Here are the top 20 most common words:

If you're interested, you can find the project on this github repository. Happy word counting!